How to maximize your use of photo technology (Part 1 - History)

Technology marches forward unevenly. Currently, the camera manufacturers are engaged in a "megapixel war", each trying to gain market share by convincing everyone that they pack the most megapixels onto their APS-C or full-frame chips.

This part of the technology has outrun all of the other components.

When is it good enough?

No matter how our technology advances, it slows down or stops when it begins to exceed our analog human capability to utilize the information.

Digitalization of Audio

We went through many generations of audio technology before arriving at a digital way to store sound.

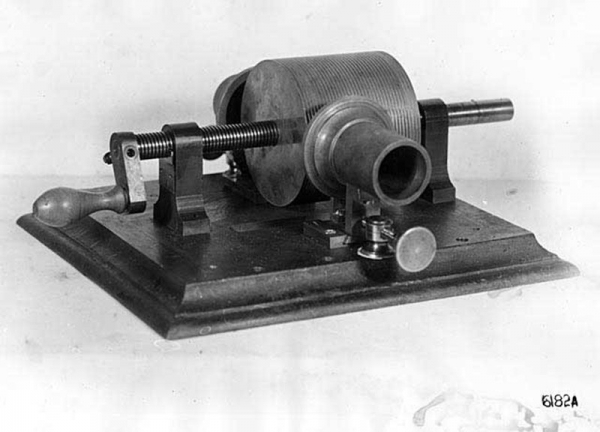

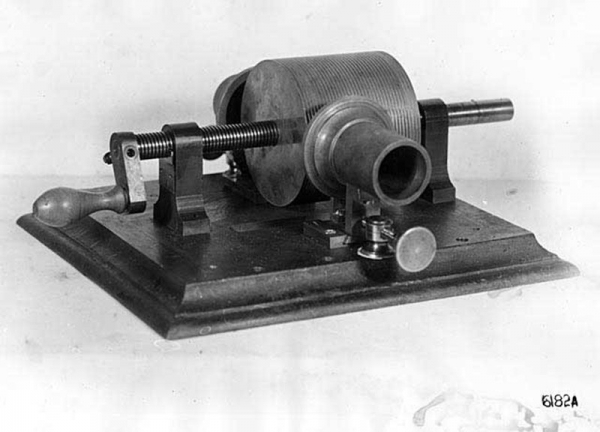

Here's Thomas A. Edison's first phonograph.

We moved through 78 and 45 rpm records, and on to 33 1/3 LPs. The formats continued to change, and the ability to reproduce sound continued to improve.

The early 1980s saw the release of the Compact Disc, which changed the recording medium from analog to digital. The industry moved almost overnight. The massive increase in audio quality made almost everyone decide to change.

Since the change to digital technology was completed, the sampling rate and bit depth haven't really moved much from the original CD standard (44.1 kHz, 16-bit). Why not? Because it was "good enough". Going beyond that standard increases cost, but the human ear can't really detect the difference.
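The "good enough" point can be checked with a little arithmetic. This is a minimal sketch, assuming the CD sampling rate of 44.1 kHz and the commonly quoted 20 kHz upper limit of human hearing (neither number is from the original post; the function names are just for illustration). A sampled signal can only represent frequencies up to half its sampling rate, the Nyquist limit.

```python
# Assumed figures, not taken from the original post:
CD_SAMPLE_RATE_HZ = 44_100   # CD audio sampling rate
HEARING_LIMIT_HZ = 20_000    # commonly quoted upper limit of human hearing


def nyquist_limit_hz(sample_rate_hz):
    """Highest frequency a given sampling rate can represent (half the rate)."""
    return sample_rate_hz / 2


def headroom_hz(sample_rate_hz, hearing_limit_hz=HEARING_LIMIT_HZ):
    """How far the Nyquist limit sits above the assumed hearing limit."""
    return nyquist_limit_hz(sample_rate_hz) - hearing_limit_hz


print(nyquist_limit_hz(CD_SAMPLE_RATE_HZ))  # 22050.0
print(headroom_hz(CD_SAMPLE_RATE_HZ))       # 2050.0
```

Under those assumptions, the CD format already covers everything the ear can pick up, with a little room to spare.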

You can argue the point of multi-channel (surround) sound, but that really doesn't change the sampling rate, just the number of channels being recorded simultaneously.

Once the technology exceeded the ability of our (analog) human ears, there was no economic justification to keep raising the sampling rate. The move to digital technology was a "game changer".

In the case of audio technology, the initial implementation of digital was good enough to cause the game change.

Digitalization of Images

When the industry's attention turned to cameras and video players, the digital technology wasn't yet good enough (in how fast and how much data it could record) to outdo the state of the art in analog technology.

For the purposes of this discussion, we're going to focus on still photography.

The early digital cameras didn't produce very good pictures. Every year saw advances not only in the CCD chips, but also in the size and speed of the digital storage (CompactFlash, SD cards, etc.).

The digital technology wasn't up to competing with analog film technology. Film cameras were recording images at somewhere between the equivalent of 8 and 16 megapixels, depending on the quality of the camera and film. So every manufacturer had to advance their piece of the technology.

As a result, we're now watching an ever-escalating race between the components of the technology.

What the manufacturers forgot was the ability of the human eye to digest that information. The technology ran over our human (analog) capability to look at images years ago.

Television existed for decades with the equivalent of 640 x 480 (VGA). We finally upped the standard to 1080p (HD). I know we've been led to believe that TVs are digital. But, guess what? The act of converting those bits and bytes to a visible screen changes the information to analog.

What does 1080p actually mean? It means 1080 lines of vertical resolution on the screen, drawn progressively (every line in every frame). The matching horizontal number at the same resolution depends only on the width of the screen you are looking at; on a standard 16:9 HD screen it works out to 1920 pixels across.
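To put the 1080-line figure in the same terms as camera megapixels, here is a rough sketch. It assumes a 16:9 screen shape (not stated in the original post), and the helper names are made up for illustration.

```python
from fractions import Fraction


def frame_size(lines, aspect=Fraction(16, 9)):
    """Return (width, height) in pixels for a given line count.

    The 16:9 default aspect ratio is an assumption for HD screens.
    """
    width = lines * aspect
    return int(width), lines


def megapixels(width, height):
    """Total pixel count expressed in millions."""
    return width * height / 1_000_000


hd_width, hd_height = frame_size(1080)
print(hd_width, hd_height)                        # 1920 1080
print(round(megapixels(hd_width, hd_height), 2))  # 2.07
print(round(megapixels(640, 480), 2))             # 0.31
```

So a full HD frame is only about 2 megapixels, and the older 640 x 480 picture was about a third of a megapixel.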

At 1080 lines, the human eye can no longer pick out the individual pixels that make up a typical screen, even from quite close up. Now, the only reason you might want to advance beyond that is to create an image that's 20 feet high. But in order to view that image, you have to stand a long way away, which negates the need for higher resolution. Human eye limitations, again.
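The viewing-distance argument can be put into rough numbers. This sketch assumes a common rule of thumb (not from the original post) that normal vision resolves detail down to about one arcminute, and estimates how far back you have to stand before the lines of a 1080-line image blend together. The function name and the one-arcminute figure are illustrative assumptions, not a precise model of the eye.

```python
import math

ACUITY_ARCMIN = 1.0  # assumed rule-of-thumb limit of normal vision


def blend_distance(image_height, lines=1080, acuity_arcmin=ACUITY_ARCMIN):
    """Distance beyond which one line looks smaller than the acuity angle.

    The result is in the same unit as image_height (feet, meters, ...).
    """
    line_pitch = image_height / lines             # height of a single line
    acuity_rad = math.radians(acuity_arcmin / 60)  # arcminutes -> radians
    return line_pitch / math.tan(acuity_rad)


for height_ft in (2, 20):
    distance_ft = blend_distance(height_ft)
    print(f"{height_ft} ft tall image: lines blend beyond about {distance_ft:.1f} ft")
```

Under these assumptions the distance scales directly with image height, so an image ten times taller has to be viewed from ten times as far away before the same 1080 lines stop being visible.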

OK, enough of the history. Part 2 will talk about how to use the technology effectively.

Jim
